A/B Testing at Title Nine

I established the company’s first-ever A/B testing program as a UX Researcher and Product Manager at Title Nine. I was responsible for the end-to-end planning and execution of tests, including test design, success evaluation, results analysis, validation, and communication to stakeholders. I managed the roadmap, prioritization, and idea generation for all tests, and then led the implementation of an optimization plan.

We have launched numerous tests since starting the program in 2019, producing some big wins and valuable learnings. What I love about this process is how iterative it is: successive versions of a test build upon each other and reveal key insights in a short amount of time.

Here are a few of our most exciting learnings:

Presence of Directory Inline Ads

Background

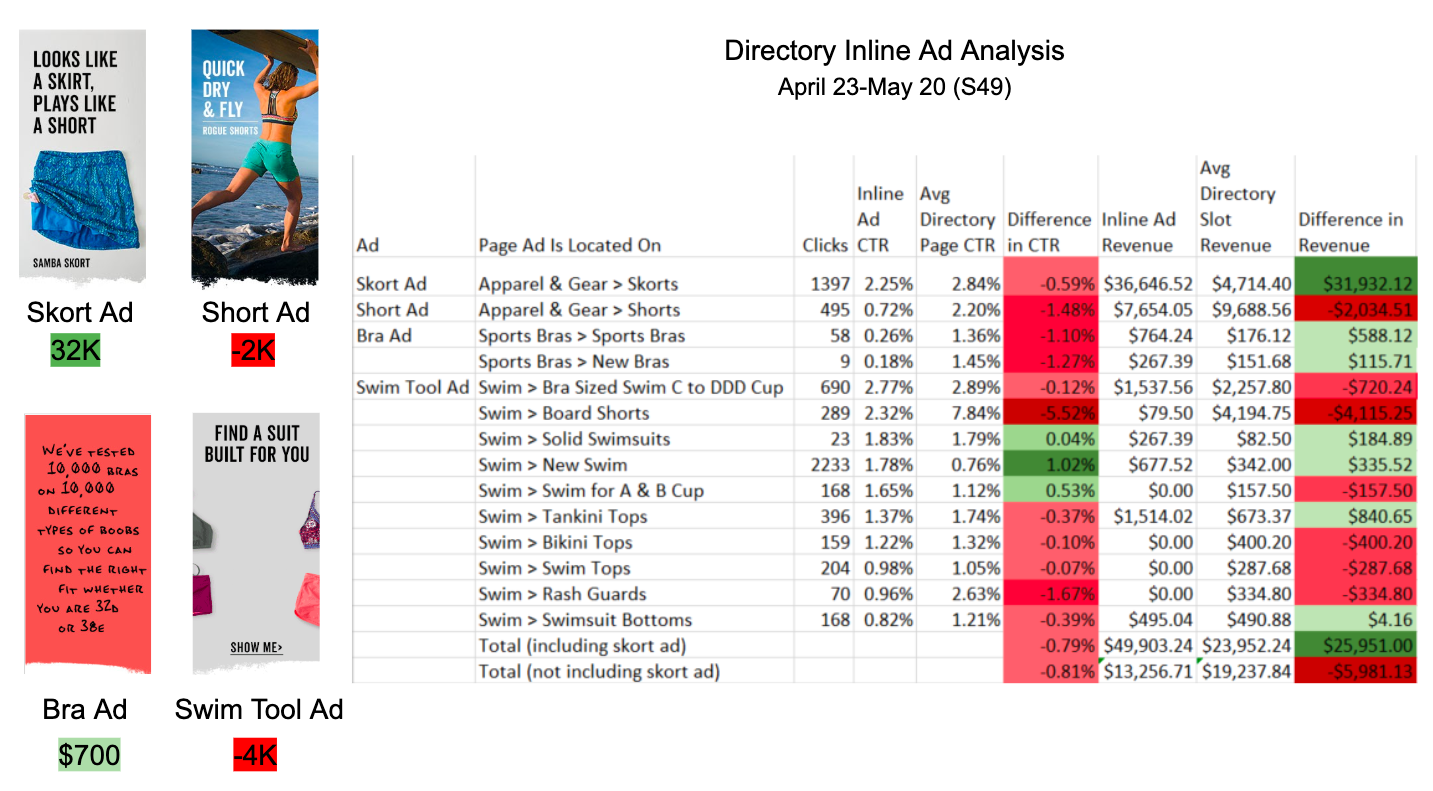

We implemented directory inline ads on our site in the hope that they would improve click-through rate (CTR) and provide brand lift. However, limitations in our Google Analytics data prevented us from getting a full and clear read on revenue and conversion rate. As a workaround, I pulled data comparing the ad's CTR and revenue against what a product in the same slot would typically generate (average directory page CTR and revenue).

While these analytics were helpful, we still needed to see the ad's impact on the page as a whole. I turned to our A/B testing program and designed a test comparing pages with vs. without directory inline ads, globally across the site.

Test #1

Hypothesis: Because our Google Analytics data is limited, we do not have enough information about our directory inline ad performance. We think the inline ad is taking up an important selling slot and negatively impacting sales.

Tactic: A/B test with vs. without directory inline ads across all pages & devices.

Results:

Over the 20-day test, the variation hiding the inline ads made $35,000 more than the variation showing them.

Revenue +4%, Conversion rate +1%

Impact: Given these results in favor of hiding inline ads, we removed all inline ads from our site. We then refined our inline ad strategy to start small and build out, so we could confirm success at every step. We began version two of our test, testing the presence of one specific ad on one specific page.
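The results in this write-up are reported as relative lifts of one variation over the other, and validating a result means checking that such a lift is unlikely to be noise. A minimal sketch of both calculations, assuming conversion rate is orders divided by visitors and using a pooled two-proportion z-test as one common significance test (not necessarily the method this program used). The visitor and order counts are hypothetical, not Title Nine data.

```python
import math


def relative_lift(control: float, variant: float) -> float:
    """Relative change of the variant over the control, e.g. 0.04 for a +4% lift."""
    return (variant - control) / control


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test comparing conversion rates of A (control) and B (variant).

    Returns (z statistic, two-sided p-value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate under the null of no difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - standard normal CDF(|z|))
    return z, p_value


# Hypothetical counts for illustration only: 100,000 visitors per variation.
control_orders, control_visitors = 2_000, 100_000
variant_orders, variant_visitors = 2_020, 100_000

cr_lift = relative_lift(control_orders / control_visitors, variant_orders / variant_visitors)
z, p = two_proportion_z_test(control_orders, control_visitors, variant_orders, variant_visitors)
print(f"Conversion rate lift: {cr_lift:+.1%}, z = {z:.2f}, p = {p:.3f}")
```

With these made-up counts, a +1% relative lift does not reach conventional significance; detecting lifts this small generally requires large samples or longer test durations.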

Test #2

Hypothesis: While global directory inline ads performed negatively, if we narrow our test to a specific ad focusing on a product's features & benefits, shown only on our dresses page, it may perform better. This test will allow us to incrementally build up to a more effective overall inline ad strategy.

Tactic: A/B test with vs. without the Swiftsnap ad on the dresses page, across all devices.

Results:

Over the 14-day test, the variation showing the Swiftsnap inline ad had a 1% higher conversion rate and 2% higher revenue than the variation without it.

76% of users scrolled far enough to see the ad, 1.4% of users who saw the ad clicked on it, and 15.4% of users who clicked on the ad made a purchase.
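Because each of these rates applies to the users who reached the previous step, they can be chained into a single end-to-end figure. A minimal sketch of that arithmetic, assuming the rates are conditional on one another exactly as the sentence describes:

```python
import math

# Reported Swiftsnap funnel rates from Test #2. Assumption: each rate is
# conditional on the previous step (clicks counted among users who saw the ad, etc.).
scrolled_to_ad = 0.76          # users who scrolled far enough to see the ad
clicked_given_seen = 0.014     # of those, users who clicked the ad
purchased_given_click = 0.154  # of those, users who made a purchase

# Multiplying conditional rates gives the share of all users who completed every step.
ad_purchase_rate = math.prod([scrolled_to_ad, clicked_given_seen, purchased_given_click])
print(f"{ad_purchase_rate:.3%} of users purchased after clicking the ad")
```

That works out to roughly 1.6 ad-driven purchases per 1,000 users in the variation showing the ad.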

Impact: Given these favorable results for this particular ad, we kept it on the site and proceeded into phase three of the project. We are currently running further small, incremental tests (e.g. ad positioning on the page, video vs. static creative, content variations) to get a full picture of what is and isn't working.

Mobile Shopping Bag - Checkout Button Above The Fold

To start our A/B test program, we saw our largest opportunity in improving the mobile shopping bag, specifically by moving the checkout button above the fold.

Tactic: Tested the mobile checkout button above the fold vs. the original UX with the button below the fold.

Results:

Conversion rate +6% lift; Avg Products/Visitor +5%

Rev/Visitor +4% lift; revenue gain over the 30-day duration was +$36K, or roughly $1,200/day
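Revenue per visitor equals conversion rate times average order value (AOV), assuming conversion rate is measured as orders per visitor, so the two reported lifts also imply how AOV moved. A minimal sketch of that decomposition; since the published lifts are rounded, the implied AOV change is approximate and is not a separately measured result.

```python
def implied_aov_lift(cr_lift: float, rpv_lift: float) -> float:
    """Back out the AOV lift from conversion-rate and revenue-per-visitor lifts.

    Revenue/visitor = (orders/visitor) * (revenue/order), so
    (1 + rpv_lift) = (1 + cr_lift) * (1 + aov_lift).
    """
    return (1 + rpv_lift) / (1 + cr_lift) - 1


# Reported mobile checkout-button lifts: conversion rate +6%, revenue/visitor +4%.
aov_lift = implied_aov_lift(cr_lift=0.06, rpv_lift=0.04)
print(f"Implied change in average order value: {aov_lift:+.1%}")
```

Under these assumptions, the higher conversion rate more than offset a slightly smaller average order (about -2%), which is why revenue per visitor still rose.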

Impact: We proceeded with implementing the test UX onto our live site.

Desktop Shopping Cart Version 1 & 2

Hypothesis: The order summary information gets pushed down to below the fold when multiple items are in cart. If we consolidate the order summary and keep it above the fold, we will increase progress to checkout.

Test #1

Tactic: Tested a new order summary column across all breakpoints.

Results:

Negative results, but tablet devices appeared to be a large factor.

Test #2

Tactic: Re-tested on desktop breakpoint only.

Results:

Neutral results. Conversion rate & revenue were flat for the test variation vs. the original.

Impact: This provided a clear learning: implementing the new order summary column was not worth the resources it would require.

Desktop Left Nav List

Hypothesis: On our directory pages, product “Refine By” filters are placed underneath a category link list, which can push the filters far down the page. Users may miss the refinement filters entirely.

Tactic: Remove the category link list on directory pages that have filters.

Results:

Usage of facets increased 90%, but all funnel metrics decreased: conversion rate -3%, revenue -5%.

Impact: Kept the original UX given the negative test results.